Researchers are not short of qualitative data. Interviews, open-ended surveys, diary studies and usability sessions generate more material than most teams can realistically work through. The constraint is time and attention. Making sense of large, unstructured datasets in ways that are defensible and methodologically sound has traditionally been slow and demanding. As data volumes keep growing, AI tools that streamline qualitative analysis have become increasingly necessary.

This guide looks at the AI tools researchers are using for qualitative analysis, what they are genuinely useful for, where their limits appear, and which options are suitable for real research work rather than early exploration or convenience tasks.

AI tools for qualitative analysis

AI tools used in qualitative analysis are often grouped together, but they behave very differently in practice. Some are general-purpose language models that researchers adapt for analysis. Others are long-standing qualitative software tools, such as NVivo and ATLAS.ti, with limited automation added on. A smaller group is built specifically around qualitative research workflows from the outset.

These differences affect how data is handled, how insights are produced, and how much trust researchers can place in the outputs. The sections below look at each type of tool in turn, focusing on how they perform in real qualitative research settings, especially where data sensitivity, auditability and analytical rigour matter.

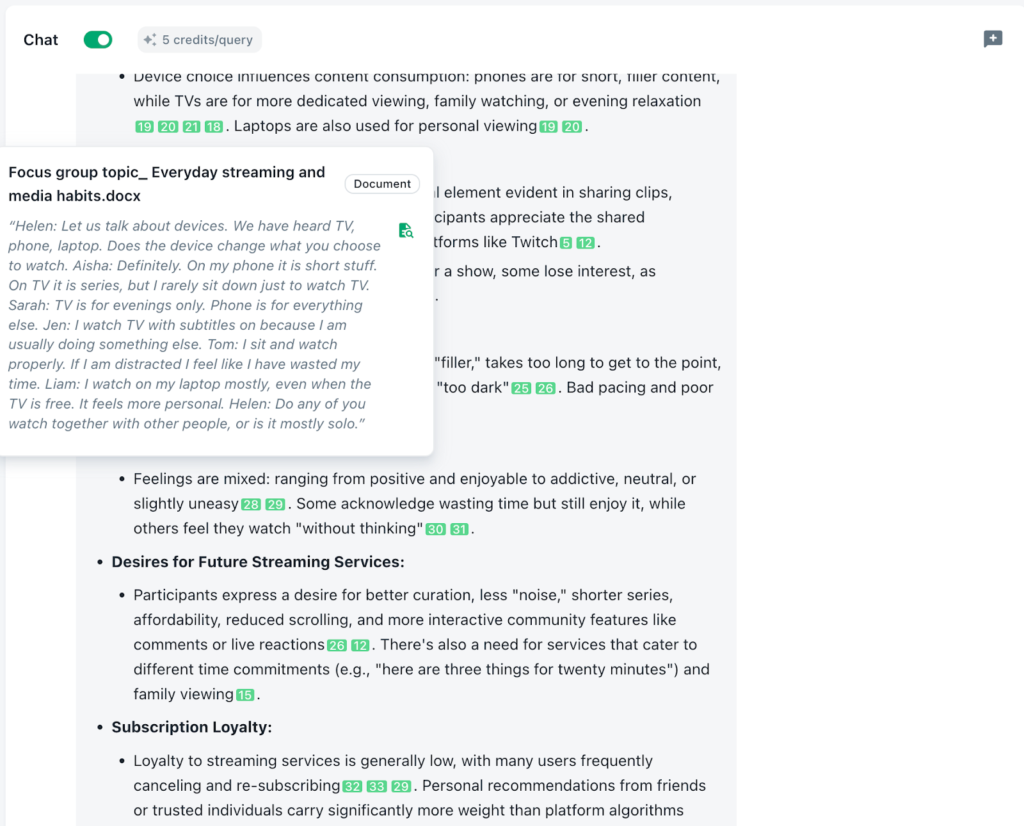

1) Beings

Best for sovereign, research-grade qualitative analysis

Beings is a qualitative analysis platform built specifically for research teams working with sensitive data, regulated clients, and complex qualitative datasets. It is not a general-purpose AI tool adapted for research use. Data control, privacy, and analytical rigour are treated as core architectural decisions.

The platform is designed for researchers who need to work across large volumes of qualitative data without losing visibility of where that data is processed or how insights are formed. This makes Beings particularly well-suited to UK research agencies and teams operating in regulated environments where public cloud AI tools are often restricted or excluded.

Why Beings is different

Beings approaches AI as part of the research workflow, not as a standalone assistant. Its AI research partner, Aida, works only with uploaded project data and is designed to behave like a senior qualitative analyst. Analysis stays grounded in the project corpus, with outputs that remain traceable to source material and easier to defend.

Data handling is explicit rather than abstracted away. Teams can control how AI models interact with their data, with UK-sovereign processing as the default and safeguards in place to prevent sensitive information being used or moved without consent. This keeps risk visible and manageable across research teams.

Beings is built to support judgement rather than replace it. The platform is designed to surface patterns, contradictions, and minority views across datasets, helping researchers focus on what matters while keeping interpretation firmly in human hands.

2) ChatGPT

General-purpose AI used for exploratory qualitative tasks

ChatGPT is a general-purpose AI tool that many researchers will turn to when they are under time pressure. It is commonly used to summarise transcripts, explore early themes, rephrase quotes, or sense-check emerging ideas. That use is understandable. The tool is fast, accessible, and produces outputs that often sound coherent and confident. It is not, however, designed around qualitative research workflows.

ChatGPT works mainly through isolated, prompt-based interactions rather than structured projects, although project-style workspaces can be set up on some plans and interfaces. While it can process large amounts of text, it does not maintain stable links between raw data, themes, and conclusions. There are no built-in audit trails, coding structures, or ways to work systematically across a corpus over time. Any insight it produces exists outside the research process and has to be manually re-grounded in the data before it can be treated as evidence.
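One part of that manual re-grounding can be scripted: checking that every quote an AI tool returns actually appears in a transcript. The sketch below is illustrative only. The function names, participant IDs, and sample transcripts are hypothetical, and it only catches quotes that fail a verbatim match after light normalisation. It does not judge whether a theme is a fair reading of the data.

```python
import re


def normalise(text: str) -> str:
    """Lower-case, straighten curly quotes, and collapse whitespace so that
    trivial formatting differences do not count as mismatches."""
    text = text.replace("\u2019", "'").replace("\u2018", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip().lower()


def ground_quotes(quotes, transcripts):
    """Map each quote to the IDs of transcripts that contain it verbatim
    (after normalisation). An empty list means the quote is unverified."""
    normalised = {tid: normalise(body) for tid, body in transcripts.items()}
    results = {}
    for quote in quotes:
        needle = normalise(quote)
        results[quote] = [
            tid for tid, body in normalised.items() if needle and needle in body
        ]
    return results


# Hypothetical sample data, for illustration only.
transcripts = {
    "P01": "I usually give up after the second login screen.  It's just too slow.",
    "P02": "The export worked fine, but I never found the settings page.",
}
quotes = [
    "it's just too slow",        # present in P01
    "I love the new dashboard",  # not present in any transcript
]

grounded = ground_quotes(quotes, transcripts)
unverified = [q for q, sources in grounded.items() if not sources]
```

Anything left in `unverified` should be treated as a possible invention and checked by hand before it goes anywhere near a report.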

There are also practical considerations around data handling. ChatGPT is a public, cloud-based system, and research material entered into it is processed within a third-party infrastructure that researchers do not directly control. Even on paid plans where model training may be disabled, data still moves through systems that are not designed with qualitative research governance in mind. For teams working with participant data or client-commissioned insight, this creates gaps in visibility and accountability.

In practice, ChatGPT can be useful for early exploration, drafting, or internal thinking. As research moves from exploration into analysis, synthesis, and reporting, its limitations become harder to work around, particularly when findings need to be defensible, traceable, and shared with confidence.

3) Claude

General-purpose AI with strong text handling, but similar constraints

Claude is a large language model developed by Anthropic and is often used as an alternative to ChatGPT. Like ChatGPT, it is typically used for summarising long transcripts or working through dense qualitative material. Its large context window makes it appealing for early passes across interviews or open-ended survey responses. It is not designed around qualitative research workflows.

Like other general-purpose AI tools, Claude operates through conversational prompts rather than structured projects. It does not provide native support for coding, project-level analysis, or clear links between themes and source material. Any outputs need to be manually checked, contextualised, and tied back to the data before they can be treated as research evidence, which limits its usefulness beyond early exploration.

Data handling with Claude depends on plan level and contractual setup. By default, data is processed within Anthropic’s cloud infrastructure, and avoiding model training or tightening retention typically requires enterprise agreements. While Anthropic offers strong safety and privacy commitments, UK-only data residency is not a default configuration. As a result, Claude is most commonly used as an exploratory aid or drafting tool rather than a primary environment for qualitative analysis, where data control and auditability are critical.

4) NVivo

Legacy qualitative analysis software with limited AI automation

NVivo is a long-established Computer-Assisted Qualitative Data Analysis Software (CAQDAS) platform used across academic and commercial research. It provides structured tools for coding, categorisation, querying, and audit trails, making it suitable for rigorous qualitative methodologies where transparency and defensibility matter.

More recent versions include some automated coding and AI-assisted features, but these remain limited in scope compared with newer AI-native platforms. Most analysis in NVivo is still manual, and scaling work across large datasets can be slow. Users frequently point to a steep learning curve, interface complexity, and performance issues as datasets grow.

NVivo continues to be valued where auditability and methodological control are priorities. It was not designed for modern AI-driven workflows or continuous, high-volume qualitative data, which can make it feel heavy for teams working at pace.

5) ATLAS.ti

Legacy CAQDAS tool with manual-first workflows

ATLAS.ti is an established qualitative analysis platform used across academic, social, and market research. It supports manual coding, memoing, querying, and visualisation across text, audio, and video data, giving researchers a high level of control over how analysis is conducted.

Although ATLAS.ti has introduced some automation and AI-supported features, the core workflow remains manual. Analysis is driven by researcher-applied codes rather than AI-assisted synthesis, and working at scale can be time-consuming. Users frequently mention the effort involved in working with the interface as a practical limitation, particularly during extended or more involved projects. One person on Capterra stated, “It is a software that requires time and testing exercises to understand how each of the tools works.”

While ATLAS.ti remains well-suited to traditional qualitative methodologies, it currently offers limited support for AI-driven pattern detection or continuous analysis across large, fast-moving qualitative datasets.

6) Anthropic

AI provider rather than a qualitative research platform

Anthropic is the company behind Claude and related AI models. It does not offer a standalone qualitative research tool. Instead, it provides large language models that organisations can access via APIs or chat interfaces, or embed within their own internal systems.

This means Anthropic supplies the underlying AI engine rather than the research environment. Teams that want to use Anthropic models for qualitative analysis must build or maintain the surrounding infrastructure themselves, including project management, data storage, retrieval, traceability, and governance controls. This approach is typically only viable for larger organisations with dedicated engineering resources.
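To give a sense of what the traceability part of that infrastructure involves, here is a minimal sketch of a theme-to-source record. It is not how any of the tools in this guide work internally. The class names, fields, and sample transcript are all hypothetical, and a real system would also need storage, access control, and versioning.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Excerpt:
    """A passage pinned to an exact location in a source transcript."""
    source_id: str
    start: int
    end: int
    text: str


@dataclass
class Theme:
    """A named theme together with the excerpts that support it."""
    name: str
    excerpts: list = field(default_factory=list)


def link_excerpt(theme, source_id, transcript, passage):
    """Attach a passage to a theme only if it occurs verbatim in the transcript."""
    start = transcript.find(passage)
    if start == -1:
        raise ValueError(f"Passage not found in {source_id}")
    excerpt = Excerpt(source_id, start, start + len(passage), passage)
    theme.excerpts.append(excerpt)
    return excerpt


def audit(theme, transcripts):
    """Return excerpts whose stored offsets no longer match the source text."""
    return [
        e for e in theme.excerpts
        if transcripts[e.source_id][e.start:e.end] != e.text
    ]


# Hypothetical sample data, for illustration only.
transcripts = {
    "P02": "The export worked fine, but I never found the settings page.",
}
navigation = Theme("Navigation problems")
linked = link_excerpt(
    navigation, "P02", transcripts["P02"], "I never found the settings page"
)
stale = audit(navigation, transcripts)  # empty while sources are unchanged
```

Storing offsets alongside the text means a later audit can detect when a transcript has been edited after coding, which is the kind of check purpose-built research tools handle for the team.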

Anthropic’s enterprise offerings allow organisations to prevent model training on their data and adjust retention policies, which is important for sensitive research. However, these protections usually require enterprise contracts, making them less accessible to individual researchers or small agencies. UK-only data residency is also not a default configuration.

As with other general-purpose AI providers, Anthropic’s models do not include built-in support for qualitative research workflows such as coding, audit trails, or project-level analysis. They function as powerful components that can drive internal systems, rather than as end-to-end solutions for qualitative research.

What qualitative researchers say about these tools

Each of these tools is designed to contribute something to qualitative research. The perspectives that follow come from people relying on them in real research work. While many praise the tools, there are some limitations which come up across multiple platforms.

ChatGPT

Researchers frequently caution against relying on general AI models for in-depth qualitative analysis. While basic tasks such as tagging or transcription can be useful, confidence in more complex analytical outputs is low.

“At this point, using AI tools for analysis is a bit sketchy. Tagging, translating, and transcription all seem pretty good. Anything more in-depth is sus… I have seen grandiose hallucinations, flat-out data invention, and very clumsy ‘insights.’ One day AI might be up to the task. Right now, it isn’t.”

Claude

There are fewer tool-specific public reviews of Claude in qualitative research contexts, but researchers often apply the same cautions to Claude and similar large language models.

“You need to use newer dedicated AI tools…, which are purpose-built for qualitative data analysis. Relying on ChatGPT or generic AI models alone won’t get you strong results… It will probably give you quotes that are hallucinated, and you won’t know how the themes are generated.”

NVivo

User reviews of NVivo on G2 consistently highlight its analytical rigour alongside usability challenges, particularly as projects scale.

“While NVivo is powerful, it does have a steep learning curve; it takes time to get used to its interface and fully leverage its wide range of features.”

“The software can be resource-heavy, which slows down performance if the dataset is large.”

ATLAS.ti

Feedback on ATLAS.ti echoes the themes raised about NVivo, with users valuing manual control but reporting friction when working across larger or more complex datasets.

“While it is great for handling engineering tasks it is difficult to sort data with and to use for other purposes. It can be difficult to sort tasks and repurpose the filter features.”

Comparing AI tools for qualitative research workflows

The table below summarises how different AI tools perform in qualitative research workflows, based on how researchers actually use them and where they most often run into limitations.

| Tool | Specifically for qualitative research? | Best for in a qual workflow | Common drawbacks researchers flag | Research-grade fit |
| --- | --- | --- | --- | --- |
| Beings | Yes | Project-based qualitative analysis where data control and traceability matter | Public review volume is still limited compared with long-established tools, so most feedback is second-hand or vendor-led | Yes |
| ChatGPT | No | Rapid summaries, first-pass theme suggestions, internal ideation | Hallucinations and confidence without verification, weak traceability back to source | Partial |
| Claude | No | Handling long transcripts and long-form synthesis drafts | Not a qual workflow tool, plus data-use settings can be a governance headache outside enterprise | Partial |
| NVivo | Yes (legacy CAQDAS) | Rigorous manual coding, audit trails, defensible analysis | Learning curve, heavy performance demands, collaboration friction | Yes |
| ATLAS.ti | Yes (legacy CAQDAS) | Manual coding and structured analysis across datasets | Errors when datasets get large, manual-first effort | Yes |
| Anthropic | No (provider) | Enterprise-grade model access for teams embedding Claude into workflows | Consumer data policies have changed, most safety guarantees sit in commercial tiers | Not a tool on its own |

How to choose the right AI tool for qualitative research

Different AI tools are often used at different moments in a research workflow, but they are not interchangeable. General-purpose models such as ChatGPT and Claude are widely accessible and can feel helpful when teams are under pressure. In practice, they are not designed to support qualitative analysis. They lack project structure, traceability, auditability, and clear data governance, all of which are fundamental once research moves beyond early exploration.

Legacy qualitative software such as NVivo and ATLAS.ti continues to support rigorous, defensible analysis, particularly where audit trails matter. However, these tools were built around manual workflows and struggle to scale efficiently as qualitative datasets grow and timelines tighten.

For teams analysing qualitative data in real research contexts, especially where participant data, client accountability, or regulatory oversight are involved, purpose-built platforms are the only practical option. Tools designed specifically for qualitative research workflows are able to support sustained analysis, collaboration, and defensible reporting without relying on unsafe workarounds or manual re-validation.

If you want to explore AI-assisted qualitative analysis in an environment designed for this kind of work, you can try Beings for free and see how it supports qualitative research without compromising data control, traceability, or analytical judgement.